Primary keys and indexes in Hive query language is poosible or not?(Hive 查询语言中的主键和索引是否可行?)

问题描述

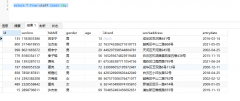

我们正在尝试将 oracle 表迁移到 hive 并对其进行处理.目前oracle中的表有primary key、foreign key和unique key约束.

We are trying to migrate oracle tables to hive and process them.

Currently the tables in oracle has primary key,foreign key and unique key constraints.

我们可以在 hive 中复制相同的内容吗?

Can we replicate the same in hive?

我们正在对如何实施它进行一些分析.

We are doing some analysis on how to implement it.

推荐答案

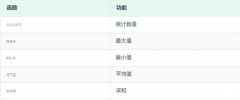

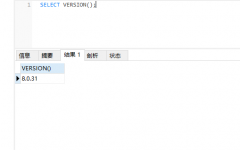

Hive 索引是在 Hive 0.7.0 (HIVE-417) 并在 Hive 3.0 中删除 (HIVE-18448) 请阅读这里的评论吉拉.该功能在 Hive 中完全没用.这些索引对于大数据来说太昂贵了,RIP.

Hive indexing was introduced in Hive 0.7.0 (HIVE-417) and removed in Hive 3.0 (HIVE-18448) Please read comments in this Jira. The feature was completely useless in Hive. These indexes was too expensive for big data, RIP.

从 Hive 2.1.0 开始(HIVE-13290)Hive 包括对未经验证的主键和外键约束的支持.这些约束未经过验证,上游系统需要在将数据加载到 Hive 之前确保数据完整性.这些约束对于生成 ER 图和查询的工具很有用.这种未经验证的约束也可用作自记录.如果表有这样的约束,你可以很容易地找出什么应该是 PK.

As of Hive 2.1.0 (HIVE-13290) Hive includes support for non-validated primary and foreign key constraints. These constraints are not validated, an upstream system needs to ensure data integrity before it is loaded into Hive. These constraints are useful for tools generating ER diagrams and queries. Also such non-validated constraints are useful as self-documenting. You can easily find out what is supposed to be a PK if the table has such constraint.

在 Oracle 数据库 Unique 中,PK 和 FK 约束有索引支持,因此它们可以快速工作并且非常有用.但这不是 Hive 的工作方式和设计目的.

In Oracle database Unique, PK and FK constraints are backed with indexes, so they can work fast and are really useful. But this is not how Hive works and what it was designed for.

很正常的情况是当您在 HDFS 中加载包含半结构化数据的非常大的文件时.在其上建立索引太昂贵,没有索引来检查 PK 违规只能扫描所有数据.通常你不能在 BigData 中强制执行约束.上游进程可以关心数据的完整性和一致性,但这并不能保证你最终不会在从不同来源加载的一些大表中的 Hive 中发生 PK 违规.

Quite normal scenario is when you loaded very big file with semi-structured data in HDFS. Building an index on it is too expensive and without index to check PK violation is possible only to scan all the data. And normally you cannot enforce constraints in BigData. Upstream process can take care about data integrity and consistency but this does not guarantee you finally will not have PK violation in Hive in some big table loaded from different sources.

某些文件存储格式,例如 ORC 具有内部轻量级索引"以加快速度-up 过滤和启用谓词下推 (PPD),使用此类索引不会实现 PK 和 FK 约束.这是无法做到的,因为通常您可以在 Hive 中拥有许多属于同一个表的此类文件,并且文件甚至可以具有不同的架构.为 PB 级创建的 Hive,您可以在单次运行中处理 PB 级,数据可以是半结构化的,文件可以有不同的架构.Hadoop 不支持随机写入,如果您想重建索引,这会增加更多的复杂性和成本.

Some file storage formats like ORC have internal light weight "indexes" to speed-up filtering and enabling predicate push down (PPD), no PK and FK constraints are implemented using such indexes. This cannot be done because normally you can have many such files belonging to the same table in Hive and files even can have different schemas. Hive created for petabytes and you can process petabytes in single run, data can be semi-structured, files can have different schemas. Hadoop does not support random writes and this adds more complications and cost if you want to rebuild indexes.

这篇关于Hive 查询语言中的主键和索引是否可行?的文章就介绍到这了,希望我们推荐的答案对大家有所帮助,也希望大家多多支持编程学习网!

本文标题为:Hive 查询语言中的主键和索引是否可行?

基础教程推荐

- 从字符串 TSQL 中获取数字 2021-01-01

- ORA-01830:日期格式图片在转换整个输入字符串之前结束/选择日期查询的总和 2021-01-01

- MySQL根据从其他列分组的值,对两列之间的值进行求和 2022-01-01

- 带有WHERE子句的LAG()函数 2022-01-01

- while 在触发器内循环以遍历 sql 中表的所有列 2022-01-01

- CHECKSUM 和 CHECKSUM_AGG:算法是什么? 2021-01-01

- MySQL 5.7参照时间戳生成日期列 2022-01-01

- 如何在 CakePHP 3 中实现 INSERT ON DUPLICATE KEY UPDATE aka upsert? 2021-01-01

- 带更新的 sqlite CTE 2022-01-01

- 使用 VBS 和注册表来确定安装了哪个版本和 32 位 2021-01-01