MySQL vs MongoDB 1000 reads(MySQL 与 MongoDB 1000 次读取)

问题描述

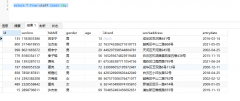

我对 MongoDb 感到非常兴奋,最近一直在测试它.我在 MySQL 中有一个名为 posts 的表,其中约有 2000 万条记录仅在名为id"的字段上建立索引.

I have been very excited about MongoDb and have been testing it lately. I had a table called posts in MySQL with about 20 million records indexed only on a field called 'id'.

我想将速度与 MongoDB 进行比较,我进行了一项测试,该测试将从我们庞大的数据库中随机获取并打印 15 条记录.我对 mysql 和 MongoDB 分别运行了大约 1,000 次查询,我很惊讶我没有注意到速度上的很大差异.也许 MongoDB 快 1.1 倍.这是非常令人失望的.有什么我做错了吗?我知道我的测试并不完美,但在阅读密集型杂务方面,MySQL 与 MongoDb 不相上下.

I wanted to compare speed with MongoDB and I ran a test which would get and print 15 records randomly from our huge databases. I ran the query about 1,000 times each for mysql and MongoDB and I am suprised that I do not notice a lot of difference in speed. Maybe MongoDB is 1.1 times faster. That's very disappointing. Is there something I am doing wrong? I know that my tests are not perfect but is MySQL on par with MongoDb when it comes to read intensive chores.

注意:

- 我有双核 +(2 个线程)i7 cpu 和 4GB 内存

- 我在 MySQL 上有 20 个分区,每个分区有 100 万条记录

用于测试 MongoDB 的示例代码

<?php

function microtime_float()

{

list($usec, $sec) = explode(" ", microtime());

return ((float)$usec + (float)$sec);

}

$time_taken = 0;

$tries = 100;

// connect

$time_start = microtime_float();

for($i=1;$i<=$tries;$i++)

{

$m = new Mongo();

$db = $m->swalif;

$cursor = $db->posts->find(array('id' => array('$in' => get_15_random_numbers())));

foreach ($cursor as $obj)

{

//echo $obj["thread_title"] . "<br><Br>";

}

}

$time_end = microtime_float();

$time_taken = $time_taken + ($time_end - $time_start);

echo $time_taken;

function get_15_random_numbers()

{

$numbers = array();

for($i=1;$i<=15;$i++)

{

$numbers[] = mt_rand(1, 20000000) ;

}

return $numbers;

}

?>

用于测试 MySQL 的示例代码

<?php

function microtime_float()

{

list($usec, $sec) = explode(" ", microtime());

return ((float)$usec + (float)$sec);

}

$BASE_PATH = "../src/";

include_once($BASE_PATH . "classes/forumdb.php");

$time_taken = 0;

$tries = 100;

$time_start = microtime_float();

for($i=1;$i<=$tries;$i++)

{

$db = new AQLDatabase();

$sql = "select * from posts_really_big where id in (".implode(',',get_15_random_numbers()).")";

$result = $db->executeSQL($sql);

while ($row = mysql_fetch_array($result) )

{

//echo $row["thread_title"] . "<br><Br>";

}

}

$time_end = microtime_float();

$time_taken = $time_taken + ($time_end - $time_start);

echo $time_taken;

function get_15_random_numbers()

{

$numbers = array();

for($i=1;$i<=15;$i++)

{

$numbers[] = mt_rand(1, 20000000);

}

return $numbers;

}

?>

推荐答案

MongoDB 并没有神奇地更快.如果您存储相同的数据,以基本相同的方式组织,并以完全相同的方式访问它,那么您真的不应该期望结果会有很大的不同.毕竟,MySQL 和 MongoDB 都是 GPL,所以如果 Mongo 中有一些神奇的更好的 IO 代码,那么 MySQL 团队可以将其合并到他们的代码库中.

MongoDB is not magically faster. If you store the same data, organised in basically the same fashion, and access it exactly the same way, then you really shouldn't expect your results to be wildly different. After all, MySQL and MongoDB are both GPL, so if Mongo had some magically better IO code in it, then the MySQL team could just incorporate it into their codebase.

人们看到真实世界的 MongoDB 性能主要是因为 MongoDB 允许您以更适合您的工作负载的不同方式进行查询.

People are seeing real world MongoDB performance largely because MongoDB allows you to query in a different manner that is more sensible to your workload.

例如,考虑一个设计,它以规范化的方式保留了有关复杂实体的大量信息.这可以轻松地使用 MySQL(或任何关系数据库)中的数十个表来以正常形式存储数据,需要许多索引来确保表之间的关系完整性.

For example, consider a design that persisted a lot of information about a complicated entity in a normalised fashion. This could easily use dozens of tables in MySQL (or any relational db) to store the data in normal form, with many indexes needed to ensure relational integrity between tables.

现在考虑使用文档存储的相同设计.如果所有这些相关表都从属于主表(而且它们经常是),那么您可能能够对数据进行建模,以便将整个实体存储在单个文档中.在 MongoDB 中,您可以将其作为单个文档存储在单个集合中.这就是 MongoDB 开始实现卓越性能的地方.

Now consider the same design with a document store. If all of those related tables are subordinate to the main table (and they often are), then you might be able to model the data such that the entire entity is stored in a single document. In MongoDB you can store this as a single document, in a single collection. This is where MongoDB starts enabling superior performance.

在 MongoDB 中,要检索整个实体,您必须执行:

In MongoDB, to retrieve the whole entity, you have to perform:

- 对集合进行一次索引查找(假设实体是通过 id 获取的)

- 检索一个数据库页面的内容(实际的二进制json文档)

所以是一个 b 树查找,和一个二进制页面读取.Log(n) + 1 个 IO.如果索引可以完全驻留在内存中,那么 1 个 IO.

So a b-tree lookup, and a binary page read. Log(n) + 1 IOs. If the indexes can reside entirely in memory, then 1 IO.

在有 20 个表的 MySQL 中,您必须执行:

In MySQL with 20 tables, you have to perform:

- 对根表进行一次索引查找(再次假设实体是通过 id 获取的)

- 使用聚集索引,我们可以假设根行的值在索引中

- 针对实体的 pk 值进行 20 多次范围查找(希望在索引上)

- 这些可能不是聚簇索引,因此一旦我们找出合适的子行是什么,同样需要进行 20 多次数据查找.

所以 mysql 的总数,即使假设所有索引都在内存中(这更难,因为它们的数量是内存的 20 倍)大约是 20 次范围查找.

So the total for mysql, even assuming that all indexes are in memory (which is harder since there are 20 times more of them) is about 20 range lookups.

这些范围查找可能由随机 IO 组成——不同的表肯定会驻留在磁盘上的不同位置,并且实体的同一表中同一范围内的不同行可能不连续(取决于实体已更新等).

These range lookups are likely comprised of random IO — different tables will definitely reside in different spots on disk, and it's possible that different rows in the same range in the same table for an entity might not be contiguous (depending on how the entity has been updated, etc).

因此,对于此示例,与 MongoDB 相比,MySQL 每次逻辑访问的最终 IO 大约是 20 倍.

So for this example, the final tally is about 20 times more IO with MySQL per logical access, compared to MongoDB.

这就是 MongoDB 在某些用例中提升性能的方式.

This is how MongoDB can boost performance in some use cases.

这篇关于MySQL 与 MongoDB 1000 次读取的文章就介绍到这了,希望我们推荐的答案对大家有所帮助,也希望大家多多支持编程学习网!

本文标题为:MySQL 与 MongoDB 1000 次读取

基础教程推荐

- 从字符串 TSQL 中获取数字 2021-01-01

- MySQL 5.7参照时间戳生成日期列 2022-01-01

- 使用 VBS 和注册表来确定安装了哪个版本和 32 位 2021-01-01

- while 在触发器内循环以遍历 sql 中表的所有列 2022-01-01

- CHECKSUM 和 CHECKSUM_AGG:算法是什么? 2021-01-01

- 带更新的 sqlite CTE 2022-01-01

- 如何在 CakePHP 3 中实现 INSERT ON DUPLICATE KEY UPDATE aka upsert? 2021-01-01

- ORA-01830:日期格式图片在转换整个输入字符串之前结束/选择日期查询的总和 2021-01-01

- 带有WHERE子句的LAG()函数 2022-01-01

- MySQL根据从其他列分组的值,对两列之间的值进行求和 2022-01-01