PySpark MySQL JDBC load: An error occurred while calling o23.load: No suitable driver

Problem description

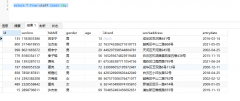


I use the Docker image sequenceiq/spark on my Mac to study these Spark examples. During the process, I upgraded the Spark inside that image to 1.6.1 according to this answer, and an error occurred when I started the Simple Data Operations example. Here is what happened:


When I run df = sqlContext.read.format("jdbc").option("url",url).option("dbtable","people").load() it raises an error. The full stack trace from the pyspark console is as follows:

Python 2.6.6 (r266:84292, Jul 23 2015, 15:22:56)
[GCC 4.4.7 20120313 (Red Hat 4.4.7-11)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
16/04/12 22:45:28 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 1.6.1
      /_/

Using Python version 2.6.6 (r266:84292, Jul 23 2015 15:22:56)
SparkContext available as sc, HiveContext available as sqlContext.
>>> url = "jdbc:mysql://localhost:3306/test?user=root;password=myPassWord"
>>> df = sqlContext.read.format("jdbc").option("url",url).option("dbtable","people").load()
16/04/12 22:46:05 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
16/04/12 22:46:06 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
16/04/12 22:46:11 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0
16/04/12 22:46:11 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException
16/04/12 22:46:16 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
16/04/12 22:46:17 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/local/spark/python/pyspark/sql/readwriter.py", line 139, in load
    return self._df(self._jreader.load())
  File "/usr/local/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 813, in __call__
  File "/usr/local/spark/python/pyspark/sql/utils.py", line 45, in deco
    return f(*a, **kw)
  File "/usr/local/spark/python/lib/py4j-0.9-src.zip/py4j/protocol.py", line 308, in get_return_value
py4j.protocol.Py4JJavaError: An error occurred while calling o23.load.
: java.sql.SQLException: No suitable driver
	at java.sql.DriverManager.getDriver(DriverManager.java:278)
	at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$2.apply(JdbcUtils.scala:50)
	at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$2.apply(JdbcUtils.scala:50)
	at scala.Option.getOrElse(Option.scala:120)
	at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.createConnectionFactory(JdbcUtils.scala:49)
	at org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD$.resolveTable(JDBCRDD.scala:120)
	at org.apache.spark.sql.execution.datasources.jdbc.JDBCRelation.<init>(JDBCRelation.scala:91)
	at org.apache.spark.sql.execution.datasources.jdbc.DefaultSource.createRelation(DefaultSource.scala:57)
	at org.apache.spark.sql.execution.datasources.ResolvedDataSource$.apply(ResolvedDataSource.scala:158)
	at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:119)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:231)
	at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:381)
	at py4j.Gateway.invoke(Gateway.java:259)
	at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:133)
	at py4j.commands.CallCommand.execute(CallCommand.java:79)
	at py4j.GatewayConnection.run(GatewayConnection.java:209)
	at java.lang.Thread.run(Thread.java:744)
>>>
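The trace shows where the error originates: JdbcUtils.createConnectionFactory calls Option.getOrElse, whose fallback is java.sql.DriverManager.getDriver(url), and that call throws "No suitable driver". The Python 3 sketch below is a deliberately simplified model of that lookup, not Spark code. It assumes (as Spark 1.6's JDBC source appears to do) that an explicit "driver" option is used directly and the DriverManager lookup only happens when "driver" is absent. The names resolve_driver_class, NoSuitableDriver and the prefix-based registered_drivers mapping (a crude stand-in for each driver's acceptsURL) are hypothetical. Even with an explicit "driver", the class itself still has to be loadable at runtime, which is why the recommended answer also puts the connector on the classpath with --packages.

```python
class NoSuitableDriver(Exception):
    """Stands in for java.sql.SQLException: No suitable driver."""


def resolve_driver_class(options, registered_drivers):
    """Pick a JDBC driver class name, loosely modelling Spark 1.6's lookup.

    options: reader options, e.g. {"url": ..., "dbtable": ..., "driver": ...}
    registered_drivers: hypothetical stand-in for the drivers that
        java.sql.DriverManager can see, mapping class name -> URL prefix
        that the driver accepts (a crude substitute for Driver.acceptsURL).
    """
    # An explicit "driver" option is used as-is, so the DriverManager
    # lookup below is skipped entirely.
    if "driver" in options:
        return options["driver"]

    # Fallback (the getOrElse branch in the trace): ask the "DriverManager"
    # for any registered driver that accepts this URL.
    url = options["url"]
    for class_name, prefix in registered_drivers.items():
        if url.startswith(prefix):
            return class_name

    # No registered driver claimed the URL: the error seen in the question.
    raise NoSuitableDriver("No suitable driver")


if __name__ == "__main__":
    mysql_url = "jdbc:mysql://localhost:3306/test"
    # Connector not visible to the "DriverManager" and no "driver" option:
    try:
        resolve_driver_class({"url": mysql_url}, {})
    except NoSuitableDriver as exc:
        print("error:", exc)
    # Same URL, but with the driver class named explicitly:
    print(resolve_driver_class({"url": mysql_url, "driver": "com.mysql.jdbc.Driver"}, {}))
```

In this model, naming the driver changes the code path rather than the classpath, which mirrors why the answer combines the "driver" option with --packages.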


Here is what I have tried so far:


- Downloaded mysql-connector-java-5.0.8-bin.jar and put it into /usr/local/spark/lib/. Still the same error.

- Created t.py like this:

from pyspark import SparkContext
from pyspark.sql import SQLContext

sc = SparkContext(appName="PythonSQL")
sqlContext = SQLContext(sc)

# Same connection URL as in the pyspark console session above
url = "jdbc:mysql://localhost:3306/test?user=root;password=myPassWord"

# Load the "people" table over JDBC, then count rows per age
df = sqlContext.read.format("jdbc").option("url",url).option("dbtable","people").load()
df.printSchema()
countsByAge = df.groupBy("age").count()
countsByAge.show()
countsByAge.write.format("json").save("file:///usr/local/mysql/mysql-connector-java-5.0.8/db.json")


- Then I ran it with spark-submit --conf spark.executor.extraClassPath=mysql-connector-java-5.0.8-bin.jar --driver-class-path mysql-connector-java-5.0.8-bin.jar --jars mysql-connector-java-5.0.8-bin.jar --master local[4] t.py. The result was still the same.


- Then I tried pyspark --conf spark.executor.extraClassPath=mysql-connector-java-5.0.8-bin.jar --driver-class-path mysql-connector-java-5.0.8-bin.jar --jars mysql-connector-java-5.0.8-bin.jar --master local[4] t.py, both with and without the trailing t.py. Still the same.


MySQL was running the whole time. Here is my OS info:

# rpm --query centos-release

centos-release-6-5.el6.centos.11.2.x86_64


The Hadoop version is 2.6.


I don't know where to go next, so I hope someone can give me some advice. Thanks!

Recommended answer


I ran into "java.sql.SQLException: No suitable driver" when I tried to have my script write to MySQL.


Here's what I did to fix that.


In script.py:

df.write.jdbc(url="jdbc:mysql://localhost:3333/my_database"
                  "?user=my_user&password=my_password",
              table="my_table",
              mode="append",
              properties={"driver": 'com.mysql.jdbc.Driver'})
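The URL in the snippet above joins its connection properties with "&", which is the separator MySQL Connector/J expects in the query part of a JDBC URL; the console session in the question joins user and password with ";" instead, which is worth fixing separately from the driver problem. The hypothetical helper below is a minimal Python 3 sketch that assembles such a URL. It inserts values verbatim (no escaping is assumed), so a password containing "&", "=" or "?" would need extra care.

```python
def mysql_jdbc_url(host, port, database, **params):
    """Build a URL of the form jdbc:mysql://host:port/database?k1=v1&k2=v2.

    Connection properties are joined with '&', matching the answer's URL.
    Values are inserted verbatim: nothing is escaped.
    """
    base = "jdbc:mysql://{0}:{1}/{2}".format(host, port, database)
    if not params:
        return base
    # Keyword arguments keep their call order (Python 3.6+).
    query = "&".join("{0}={1}".format(key, value) for key, value in params.items())
    return base + "?" + query


if __name__ == "__main__":
    # The question's connection, rewritten with '&' between properties.
    print(mysql_jdbc_url("localhost", 3306, "test", user="root", password="myPassWord"))
```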


Then I ran spark-submit this way:

SPARK_HOME=/usr/local/Cellar/apache-spark/1.6.1/libexec spark-submit --packages mysql:mysql-connector-java:5.1.39 ./script.py


Note that SPARK_HOME depends on where Spark is installed. For your environment, https://github.com/sequenceiq/docker-spark/blob/master/README.md might help.
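The command above relies on --packages, which takes a comma-separated list of Maven coordinates (groupId:artifactId:version, here mysql:mysql-connector-java:5.1.39); spark-submit resolves them and adds the jars to the driver and executor classpaths, so no local jar path is needed. If you launch jobs from Python, a minimal Python 3.8+ sketch is to build the command as an argument list; spark_submit_argv is a hypothetical helper, and only the --packages and --master flags used in this answer are covered.

```python
import shlex


def spark_submit_argv(script, packages=(), master=None, spark_submit="spark-submit"):
    """Assemble a spark-submit command line as a list of arguments.

    packages: Maven coordinates such as "mysql:mysql-connector-java:5.1.39";
        they are passed to --packages as one comma-separated value.
    master: optional master URL, e.g. "local[4]".
    """
    argv = [spark_submit]
    if packages:
        argv += ["--packages", ",".join(packages)]
    if master:
        argv += ["--master", master]
    # The application script goes last, after all spark-submit options.
    argv.append(script)
    return argv


if __name__ == "__main__":
    argv = spark_submit_argv("t.py", ["mysql:mysql-connector-java:5.1.39"], master="local[4]")
    # shlex.join quotes arguments such as local[4] for safe pasting into a shell.
    print(shlex.join(argv))
```

The resulting list can be handed to subprocess.run; if SPARK_HOME must be set as in the answer, pass it through the env argument rather than editing the command string.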


In case all of the above is confusing, try this:

In t.py, replace

sqlContext.read.format("jdbc").option("url",url).option("dbtable","people").load()

with

sqlContext.read.format("jdbc").option("url",url).option("dbtable","people").option("driver", 'com.mysql.jdbc.Driver').load()

and then run

spark-submit --packages mysql:mysql-connector-java:5.1.39 --master local[4] t.py
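One way to keep the url, dbtable and driver options together is to collect them in a dictionary before calling the reader. The helper below is a hypothetical Python 3 sketch: it checks that url and dbtable (both required by Spark's JDBC source) are present and defaults driver to com.mysql.jdbc.Driver, the Connector/J 5.x class name used in this answer (Connector/J 8.x renamed it to com.mysql.cj.jdbc.Driver).

```python
def jdbc_read_options(url, dbtable, driver="com.mysql.jdbc.Driver", **extra):
    """Collect options for sqlContext.read.format("jdbc").

    url and dbtable are required by Spark's JDBC source; driver names the
    JDBC driver class explicitly so Spark does not have to ask
    java.sql.DriverManager to find one for the URL.
    extra: any further reader options, passed through unchanged.
    """
    if not url or not dbtable:
        raise ValueError("both url and dbtable are required")
    options = {"url": url, "dbtable": dbtable, "driver": driver}
    options.update(extra)
    return options


if __name__ == "__main__":
    opts = jdbc_read_options("jdbc:mysql://localhost:3306/test", "people")
    print(sorted(opts))
```

Assuming your PySpark version provides DataFrameReader.options, the read in t.py could then be written as sqlContext.read.format("jdbc").options(**jdbc_read_options(url, "people")).load().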
