SQLite Performance Benchmark -- why is :memory: so slow...only 1.5X as fast as disk?(SQLite 性能基准测试——为什么 :memory: 这么慢……只有磁盘的 1.5 倍?)

问题描述

我一直在尝试查看使用内存中的 sqlite 与基于磁盘的 sqlite 是否有任何性能改进.基本上,我想以启动时间和内存为代价来获得极快的查询,而这些查询在应用程序运行过程中不会 访问磁盘.

I've been trying to see if there are any performance improvements gained by using in-memory sqlite vs. disk based sqlite. Basically I'd like to trade startup time and memory to get extremely rapid queries which do not hit disk during the course of the application.

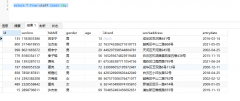

但是,以下基准测试仅使我的速度提高了 1.5 倍.在这里,我生成 100 万行随机数据并将其加载到同一个表的基于磁盘和内存的版本中.然后我在两个数据库上运行随机查询,返回大小约为 300k 的集合.我预计基于内存的版本会快得多,但如前所述,我只获得了 1.5 倍的加速.

However, the following benchmark gives me only a factor of 1.5X in improved speed. Here, I'm generating 1M rows of random data and loading it into both a disk and memory based version of the same table. I then run random queries on both dbs, returning sets of size approx 300k. I expected the memory based version to be considerably faster, but as mentioned I'm only getting 1.5X speedups.

我尝试了其他几种大小的数据库和查询集;:memory: does 的优势似乎随着数据库中行数的增加而增加.我不确定为什么优势如此之小,但我有一些假设:

I experimented with several other sizes of dbs and query sets; the advantage of :memory: does seem to go up as the number of rows in the db increases. I'm not sure why the advantage is so small, though I had a few hypotheses:

- 所使用的表不够大(按行),无法使 :memory:大赢家

- 更多的连接/表将使 :memory: 优势更加明显

- 在连接或操作系统级别进行了某种缓存,以便可以以某种方式访问先前的结果,从而破坏了基准

- 存在某种我没有看到的隐藏磁盘访问(我还没有尝试过 lsof,但我确实关闭了 PRAGMA 以进行日志记录)

我在这里做错了吗?关于为什么 :memory: 没有产生近乎即时的查找的任何想法?这是基准:

Am I doing something wrong here? Any thoughts on why :memory: isn't producing nearly instant lookups? Here's the benchmark:

==> sqlite_memory_vs_disk_benchmark.py <==

#!/usr/bin/env python

"""Attempt to see whether :memory: offers significant performance benefits.

"""

import os

import time

import sqlite3

import numpy as np

def load_mat(conn,mat):

c = conn.cursor()

#Try to avoid hitting disk, trading safety for speed.

#http://stackoverflow.com/questions/304393

c.execute('PRAGMA temp_store=MEMORY;')

c.execute('PRAGMA journal_mode=MEMORY;')

# Make a demo table

c.execute('create table if not exists demo (id1 int, id2 int, val real);')

c.execute('create index id1_index on demo (id1);')

c.execute('create index id2_index on demo (id2);')

for row in mat:

c.execute('insert into demo values(?,?,?);', (row[0],row[1],row[2]))

conn.commit()

def querytime(conn,query):

start = time.time()

foo = conn.execute(query).fetchall()

diff = time.time() - start

return diff

#1) Build some fake data with 3 columns: int, int, float

nn = 1000000 #numrows

cmax = 700 #num uniques in 1st col

gmax = 5000 #num uniques in 2nd col

mat = np.zeros((nn,3),dtype='object')

mat[:,0] = np.random.randint(0,cmax,nn)

mat[:,1] = np.random.randint(0,gmax,nn)

mat[:,2] = np.random.uniform(0,1,nn)

#2) Load it into both dbs & build indices

try: os.unlink('foo.sqlite')

except OSError: pass

conn_mem = sqlite3.connect(":memory:")

conn_disk = sqlite3.connect('foo.sqlite')

load_mat(conn_mem,mat)

load_mat(conn_disk,mat)

del mat

#3) Execute a series of random queries and see how long it takes each of these

numqs = 10

numqrows = 300000 #max number of ids of each kind

results = np.zeros((numqs,3))

for qq in range(numqs):

qsize = np.random.randint(1,numqrows,1)

id1a = np.sort(np.random.permutation(np.arange(cmax))[0:qsize]) #ensure uniqueness of ids queried

id2a = np.sort(np.random.permutation(np.arange(gmax))[0:qsize])

id1s = ','.join([str(xx) for xx in id1a])

id2s = ','.join([str(xx) for xx in id2a])

query = 'select * from demo where id1 in (%s) AND id2 in (%s);' % (id1s,id2s)

results[qq,0] = round(querytime(conn_disk,query),4)

results[qq,1] = round(querytime(conn_mem,query),4)

results[qq,2] = int(qsize)

#4) Now look at the results

print " disk | memory | qsize"

print "-----------------------"

for row in results:

print "%.4f | %.4f | %d" % (row[0],row[1],row[2])

这是结果.请注意,对于相当广泛的查询大小,磁盘占用的内存大约是内存的 1.5 倍.

Here's the results. Note that disk takes about 1.5X as long as memory for a fairly wide range of query sizes.

[ramanujan:~]$python -OO sqlite_memory_vs_disk_clean.py

disk | memory | qsize

-----------------------

9.0332 | 6.8100 | 12630

9.0905 | 6.6953 | 5894

9.0078 | 6.8384 | 17798

9.1179 | 6.7673 | 60850

9.0629 | 6.8355 | 94854

8.9688 | 6.8093 | 17940

9.0785 | 6.6993 | 58003

9.0309 | 6.8257 | 85663

9.1423 | 6.7411 | 66047

9.1814 | 6.9794 | 11345

相对于磁盘,RAM 不应该几乎是即时的吗?这里出了什么问题?

Shouldn't RAM be almost instant relative to disk? What's going wrong here?

这里有一些很好的建议.

Some good suggestions here.

我想对我来说最重要的一点是**可能没有办法让 :memory: 绝对更快,但有一种方法可以使磁盘访问相对较慢. **

I guess the main takehome point for me is that **there's probably no way to make :memory: absolutely faster, but there is a way to make disk access relatively slower. **

换句话说,基准测试充分衡量了内存的实际性能,而不是磁盘的实际性能(例如,因为 cache_size pragma 太大或因为我没有进行写入).当我有机会时,我会处理这些参数并发布我的发现.

In other words, the benchmark is adequately measuring the realistic performance of memory, but not the realistic performance of disk (e.g. because the cache_size pragma is too big or because I'm not doing writes). I'll mess around with those parameters and post my findings when I get a chance.

也就是说,如果有人认为我可以从内存数据库中挤出更多速度(除了通过提升 cache_size 和 default_cache_size,我会这样做),我全神贯注......

That said, if there is anyone who thinks I can squeeze some more speed out of the in-memory db (other than by jacking up the cache_size and default_cache_size, which I will do), I'm all ears...

推荐答案

这与 SQLite 具有页面缓存这一事实有关.根据文档,默认的页面缓存是 2000 1K 页或大约 2Mb.由于这大约是您数据的 75% 到 90%,因此这两个数字非常相似也就不足为奇了.我的猜测是除了 SQLite 页面缓存之外,其余数据仍在 OS 磁盘缓存中.如果您让 SQLite 刷新页面缓存(和磁盘缓存),您会看到一些非常显着的差异.

It has to do with the fact that SQLite has a page cache. According to the Documentation, the default page cache is 2000 1K pages or about 2Mb. Since this is about 75% to 90% of your data, it isn't surprising that the two number are very similar. My guess is that in addition to the SQLite page cache, the rest of the data is still in the OS disk cache. If you got SQLite to flush the page cache (and the disk cache) you would see some really significant differences.

这篇关于SQLite 性能基准测试——为什么 :memory: 这么慢……只有磁盘的 1.5 倍?的文章就介绍到这了,希望我们推荐的答案对大家有所帮助,也希望大家多多支持编程学习网!

本文标题为:SQLite 性能基准测试——为什么 :memory: 这么慢……只有磁盘的 1.5 倍?

基础教程推荐

- CHECKSUM 和 CHECKSUM_AGG:算法是什么? 2021-01-01

- MySQL根据从其他列分组的值,对两列之间的值进行求和 2022-01-01

- 如何在 CakePHP 3 中实现 INSERT ON DUPLICATE KEY UPDATE aka upsert? 2021-01-01

- 从字符串 TSQL 中获取数字 2021-01-01

- MySQL 5.7参照时间戳生成日期列 2022-01-01

- while 在触发器内循环以遍历 sql 中表的所有列 2022-01-01

- ORA-01830:日期格式图片在转换整个输入字符串之前结束/选择日期查询的总和 2021-01-01

- 使用 VBS 和注册表来确定安装了哪个版本和 32 位 2021-01-01

- 带更新的 sqlite CTE 2022-01-01

- 带有WHERE子句的LAG()函数 2022-01-01