Python multiprocessing and database access with pyodbc "is not safe"?

Problem description

Problem:

I am getting the following traceback and don't understand what it means or how to fix it:

    Traceback (most recent call last):
      File "<string>", line 1, in <module>
      File "C:\Python26\lib\multiprocessing\forking.py", line 342, in main
        self = load(from_parent)
      File "C:\Python26\lib\pickle.py", line 1370, in load
        return Unpickler(file).load()
      File "C:\Python26\lib\pickle.py", line 858, in load
        dispatch[key](self)
      File "C:\Python26\lib\pickle.py", line 1083, in load_newobj
        obj = cls.__new__(cls, *args)
    TypeError: object.__new__(pyodbc.Cursor) is not safe, use pyodbc.Cursor.__new__()

Situation:

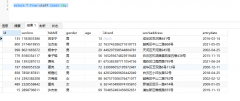

I've got a SQL Server database full of data to be processed. I'm trying to use the multiprocessing module to parallelize the work and take advantage of the multiple cores on my computer. My general class structure is as follows:

- MyManagerClass
  - This is the main class, where the program starts.
  - It creates two multiprocessing.Queue objects, one work_queue and one write_queue.
  - It also creates and launches the other processes, then waits for them to finish.
  - NOTE: this is not an extension of multiprocessing.managers.BaseManager()
- MyReaderClass
  - This class reads data from the SQL Server database.
  - It puts items in the work_queue.
- The worker class
  - This is where the work processing happens.
  - It gets items from the work_queue and puts completed items in the write_queue.
- MyWriterClass
  - This class is in charge of writing the processed data back to the SQL Server database.
  - It gets items from the write_queue.

The idea is that there will be one manager, one reader, one writer, and many workers.
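The layout described above can be sketched roughly as follows. This is a minimal sketch, not the asker's code: the names worker_loop, run_pipeline, square and the STOP sentinel are hypothetical, the reader and writer roles are folded into the parent process to keep the example short, and Python 3 is assumed.

```python
import multiprocessing

STOP = None  # hypothetical sentinel telling a consumer that no more items will arrive


def square(x):
    """Placeholder for the real per-item processing."""
    return x * x


def worker_loop(work_queue, write_queue, transform):
    """Take items from work_queue until STOP, put results on write_queue."""
    while True:
        item = work_queue.get()
        if item is STOP:
            break
        write_queue.put(transform(item))
    write_queue.put(STOP)  # tell the writer side that this worker is finished


def run_pipeline(items, n_workers=2):
    """Manager role: create both queues, launch workers, wait for them to finish."""
    work_queue = multiprocessing.Queue()
    write_queue = multiprocessing.Queue()
    workers = [
        multiprocessing.Process(
            target=worker_loop, args=(work_queue, write_queue, square)
        )
        for _ in range(n_workers)
    ]
    for worker in workers:
        worker.start()

    # Reader role: feed plain, picklable items, then one STOP per worker.
    for item in items:
        work_queue.put(item)
    for _ in workers:
        work_queue.put(STOP)

    # Writer role: drain results until every worker has reported STOP.
    results = []
    finished = 0
    while finished < n_workers:
        result = write_queue.get()
        if result is STOP:
            finished += 1
        else:
            results.append(result)

    for worker in workers:
        worker.join()
    return sorted(results)


if __name__ == "__main__":
    print(run_pipeline(range(5)))
```

Only plain values travel through the queues here; nothing that holds a database handle is ever put on them.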

Other details:

I get the traceback twice in stderr, so I'm thinking that it happens once for the reader and once for the writer. My worker processes get created fine, but just sit there until I send a KeyboardInterrupt because they have nothing in the work_queue.

Both the reader and writer have their own connection to the database, created on initialization.

Solution:

Thanks to Mark and Ferdinand Beyer for their answers and questions that led to this solution. They rightfully pointed out that the Cursor object is not "pickle-able", which is the method that multiprocessing uses to pass information between processes.

The issue with my code was that MyReaderClass(multiprocessing.Process) and MyWriterClass(multiprocessing.Process) both connected to the database in their __init__() methods. I created both these objects (i.e. called their init method) in MyManagerClass, then called start(). So it would create the connection and cursor objects, then try to send them to the child process via pickle. My solution was to move the instantiation of the connection and cursor objects to the run() method, which isn't called until the child process is fully created.
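A minimal sketch of that fix, under some loud assumptions: sqlite3 stands in for pyodbc so the example runs without a SQL Server instance, the class name ReaderProcess and the items table are hypothetical, and Python 3 is assumed. The point is only the placement of the connect call: __init__() keeps plain configuration, and run() opens the connection inside the child process.

```python
import multiprocessing
import os
import sqlite3
import tempfile


class ReaderProcess(multiprocessing.Process):
    """Hypothetical reader that holds only picklable state until run() executes."""

    def __init__(self, db_path, work_queue):
        super().__init__()
        # Plain configuration only: no connection or cursor is created here,
        # because __init__() runs in the parent process.
        self.db_path = db_path
        self.work_queue = work_queue

    def run(self):
        # run() executes in the child process, so the connection and cursor
        # are created there and never have to be pickled.
        connection = sqlite3.connect(self.db_path)  # stand-in for pyodbc.connect(...)
        try:
            cursor = connection.cursor()
            cursor.execute("SELECT id, payload FROM items ORDER BY id")
            for row in cursor:
                self.work_queue.put(tuple(row))  # send plain tuples, never the cursor
        finally:
            connection.close()
        self.work_queue.put(None)  # sentinel: no more work


if __name__ == "__main__":
    # Build a throwaway database so the demo is self-contained.
    db_path = os.path.join(tempfile.mkdtemp(), "demo.db")
    setup = sqlite3.connect(db_path)
    setup.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, payload TEXT)")
    setup.executemany("INSERT INTO items VALUES (?, ?)", [(1, "a"), (2, "b")])
    setup.commit()
    setup.close()

    work_queue = multiprocessing.Queue()
    reader = ReaderProcess(db_path, work_queue)
    reader.start()  # only db_path and the queue are handed to the child
    for item in iter(work_queue.get, None):
        print(item)
    reader.join()
```

A writer process would follow the same pattern: keep the connection details as strings in __init__() and connect at the top of run().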

Recommended answer

Multiprocessing relies on pickling to communicate objects between processes. The pyodbc connection and cursor objects cannot be pickled.

    >>> cPickle.dumps(aCursor)
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/usr/lib64/python2.5/copy_reg.py", line 69, in _reduce_ex
        raise TypeError, "can't pickle %s objects" % base.__name__
    TypeError: can't pickle Cursor objects
    >>> cPickle.dumps(dbHandle)
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/usr/lib64/python2.5/copy_reg.py", line 69, in _reduce_ex
        raise TypeError, "can't pickle %s objects" % base.__name__
    TypeError: can't pickle Connection objects
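One way to check in advance whether an object can cross a process boundary is to try pickling it. The sketch below is an illustration under assumptions: is_picklable is a hypothetical helper, Python 3 is assumed, and sqlite3 stands in for pyodbc because its connection and cursor objects also refuse to be pickled.

```python
import pickle
import sqlite3


def is_picklable(obj):
    """Return True if pickle can serialize obj, False otherwise."""
    try:
        pickle.dumps(obj)
    except (pickle.PicklingError, TypeError, AttributeError):
        return False
    return True


connection = sqlite3.connect(":memory:")  # stand-in for a pyodbc connection
cursor = connection.cursor()

print(is_picklable((1, "some row data")))  # plain tuples are fine to queue
print(is_picklable(connection))            # database handles are not
print(is_picklable(cursor))
```

Running a check like this on whatever is about to be put on a queue, or stored on a Process subclass before start(), shows quickly whether a database handle has slipped in.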

"It puts items in the work_queue", what items? Is it possible the cursor object is getting passed as well?
