Optimal MySQL settings for queries that deliver large amounts of data?(用于提供大量数据的查询的最佳 MySQL 设置?)

问题描述

我是一名科学家,我使用 MySQL 作为我的数值模拟结果的存储.通常我有一组通过我的实验获得的数据和一个控制组.这两个数据集存储在一张表中.一个指标字段告诉我记录是来自实验还是来自控制集.这个表通常有大约 1 亿条记录.5000 万次实验和 5000 万次对照.

I work as a scientist and I have used MySQL as a storage for the results of my numerical simulations. Typically I have a set of data obtained by my experiment and a control set. These two data sets are stored in one table. One indicator field tells me if a record comes from experiment or from a control set. This table usually has ~ 100 million records. 50million experiments and 50 million controls.

当我对数据进行后处理时,我的典型任务包括首先发出以下两个查询:

When I do the post processing of my data my typical task consists of first issuing the following two queries:

select b0,t0 from results_1mregr_c_ew_f where RC='E' and df>60 /// getting experiments data

和

select b0,t0 from results_1mregr_c_ew_f where RC='C' and df>60 /// getting controls data

我在 RC,df 上有一个多列索引.这些查询需要大量时间,并且查询花费大部分时间发送数据"

I have a multi column index on RC,df. These queries take lots of time and the queries spend most of the time "Sending data"

我在具有 12GB RAM 的 8 核 MacPro 上运行它.我是这台机器的单一用户,这项任务是主要任务,因此我可以将所有 RAM 专用于 MySQL.所有表都是 MyISAM(如果可以提高查询速度,我可以转换它们).

I'm running this on 8core MacPro with 12GB of RAM. I'm a single user of this machine and this task is the primary task therefore I can dedicate all the RAM to MySQL. All tables are MyISAM (I can convert them if that would increase teh speed of my queries).

如果您有任何关于如何加快这些查询速度的建议,我将不胜感激.我是否应该更改一些设置、索引、查询....

I would appreciate any recommendations on how to speed up these queries. Should I change some settings, indices, queries....

在这些查询中的每一个中,我都希望得到大约 5000 万条记录.请注意,由于管理原因,不能将表拆分为两个表,一个包含实验,一个包含对照观察.

In each of these queries I expect to get back ~ 50 million records. Note that splitting the table into two tables one containing experimental and one containing control observation is not an option due to administrative reasons.

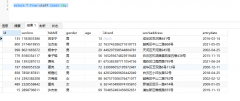

这里是输出:

explain select b0, t0 from results_1mregr_c_ew_f where RC="C" and df>60

+----+-----------+---------------------+-----+-------------+---+-------+----+-------+-----------+

| id |select_type|table |type |possible_keys|key|key_len|ref |rows |Extra |

+----+-----------+---------------------+-----+-------------+---+-------+----+-------+-----------+

| 1 |SIMPLE |results_1mregr_c_ew_f|range|ff |ff |11 |NULL|6251121|Using where|

+----+-----------+---------------------+-----+-------------+---+-------+----+-------+-----------+

输出如下:

show indexes from results_1mregr_c_ew_f;

+-----------------------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+

| Table | Non_unique | Key_name | Seq_in_index | Column_name | Collation | Cardinality | Sub_part | Packed | Null | Index_type | Comment |

+-----------------------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+

| results_1mregr_c_ew_f | 0 | PRIMARY | 1 | id | A | 50793996 | NULL | NULL | | BTREE | |

| results_1mregr_c_ew_f | 1 | ff | 1 | RC | A | 3 | NULL | NULL | | BTREE | |

| results_1mregr_c_ew_f | 1 | ff | 2 | df | A | 120 | NULL | NULL | | BTREE | |

+-----------------------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+

输出如下:

CREATE TABLE `results_1mregr_c_ew_f` (

`b0` double NOT NULL COMMENT ' ',

`s0` double NOT NULL,

`t0` double NOT NULL,

`b1` double NOT NULL,

`s1` double NOT NULL,

`t1` double NOT NULL,

`b2` double NOT NULL,

`s2` double NOT NULL,

`t2` double NOT NULL,

`b3` double NOT NULL,

`s3` double NOT NULL,

`t3` double NOT NULL,

`b4` double NOT NULL,

`s4` double NOT NULL,

`t4` double NOT NULL,

`AD` char(4) NOT NULL,

`chisq` double NOT NULL,

`RC` char(7) NOT NULL,

`colq` varchar(255) NOT NULL,

`df` int(11) NOT NULL,

`ncol` int(11) NOT NULL,

`id` int(10) unsigned NOT NULL AUTO_INCREMENT,

`p1` float NOT NULL,

`p2` float NOT NULL,

`p3` float NOT NULL,

`p4` float NOT NULL,

PRIMARY KEY (`id`),

KEY `ff` (`RC`,`df`)

) ENGINE=MyISAM AUTO_INCREMENT=50793997 DEFAULT CHARSET=ascii |

推荐答案

当我可以在类似硬件上在 60 秒内完成同样的事情时,你的查询需要 2 小时才能执行,这肯定是严重错误.

Something has to be seriously wrong for your query to be taking 2 hours to execute when I can do the same thing in under 60 seconds on similar hardware.

>

以下某些内容可能会有所帮助...

Some of the following might prove helpful...

检查您的服务器配置并进行相应优化.以下一些资源应该有用.

Check your server configuration and optimise accordingly. Some of the following resources should be useful.

- http://www.mysqlperformanceblog.com/2006/09/29/what-to-tune-in-mysql-server-after-installation/

- http://www.mysqlperformanceblog.com/

- http://www.highperfmysql.com/

- http://forge.mysql.com/wiki/ServerVariables

- http://dev.mysql.com/doc/refman/5.0/en/server-system-variables.html

- http://www.xaprb.com/blog/2006/07/04/how-to-exploit-mysql-index-optimizations/

- http://jpipes.com/presentations/perf_tuning_best_practices.pdf

- http://jpipes.com/presentations/index_coding_optimization.pdf

- http://www.jasny.net/?p=36

现在是不太明显的...

Now for the less obvious...

为什么不在 MySQL 内部处理所有数据,这样您就不必向应用层发送大量数据?以下示例使用游标在 2 分钟内循环并处理服务器端 50M 行.我不是游标的忠实粉丝,尤其是在它们非常有限的 MySQL 中,但我猜你会循环结果集并进行某种形式的数值分析,因此在这种情况下使用游标是合理的.

Why not process all the data inside of MySQL so you don't have to send vast quantities of data to your application layer ? The following example uses a cursor to loop and process 50M rows server side in under 2 minutes. I'm not a huge fan of cursors, especially in MySQL where they are very limited, but I'm guessing you'd be looping the resultset and doing some form of numerical analysis so use of a cursor is justifiable in this case.

简化的 myisam 结果表 - 基于您的键.

drop table if exists results_1mregr_c_ew_f;

create table results_1mregr_c_ew_f

(

id int unsigned not null auto_increment primary key,

rc tinyint unsigned not null,

df int unsigned not null default 0,

val double(10,4) not null default 0,

ts timestamp not null default now(),

key (rc, df)

)

engine=myisam;

我生成了 100M 行数据,其中关键字段的基数与您的示例中的基数大致相同:

I generated 100M rows of data with the key fields having approximately the same cardinality as in your example:

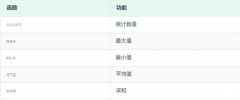

show indexes from results_1mregr_c_ew_f;

Table Non_unique Key_name Seq_in_index Column_name Collation Cardinality Index_type

===== ========== ======== ============ =========== ========= =========== ==========

results_1mregr_c_ew_f 0 PRIMARY 1 id A 100000000 BTREE

results_1mregr_c_ew_f 1 rc 1 rc A 2 BTREE

results_1mregr_c_ew_f 1 rc 2 df A 223 BTREE

存储过程

我创建了一个简单的存储过程来获取所需的数据并对其进行处理(使用与示例相同的 where 条件)

I created a simple stored procedure that fetches the required data and processes it (uses same where condition as your example)

drop procedure if exists process_results_1mregr_c_ew_f;

delimiter #

create procedure process_results_1mregr_c_ew_f

(

in p_rc tinyint unsigned,

in p_df int unsigned

)

begin

declare v_count int unsigned default 0;

declare v_done tinyint default 0;

declare v_id int unsigned;

declare v_result_cur cursor for select id from results_1mregr_c_ew_f where rc = p_rc and df > p_df;

declare continue handler for not found set v_done = 1;

open v_result_cur;

repeat

fetch v_result_cur into v_id;

set v_count = v_count + 1;

-- do work...

until v_done end repeat;

close v_result_cur;

select v_count as counter;

end #

delimiter ;

观察到以下运行时:

call process_results_1mregr_c_ew_f(0,60);

runtime 1 = 03:24.999 Query OK (3 mins 25 secs)

runtime 2 = 03:32.196 Query OK (3 mins 32 secs)

call process_results_1mregr_c_ew_f(1,60);

runtime 1 = 04:59.861 Query OK (4 mins 59 secs)

runtime 2 = 04:41.814 Query OK (4 mins 41 secs)

counter

========

23000002 (23 million rows processed in each case)

嗯,表现有点令人失望,所以进入下一个想法.

Hmmmm, performance a bit disappointing so onto the next idea.

为什么是innodb ??因为它有聚集索引!您会发现使用 innodb 插入速度较慢,但希望读取速度会更快,因此这是值得的权衡.

Why innodb ?? because it has clustered indexes ! You will find inserting slower using innodb but hopefully it will be faster to read so it's a trade off that might be worth it.

通过聚集索引访问一行很快,因为行数据在索引搜索引导的同一页上.如果表很大,与使用与索引记录不同的页面存储行数据的存储组织相比,聚簇索引体系结构通常可以节省磁盘 I/O 操作.例如,MyISAM 使用一个文件数据行和另一个用于索引记录.

Accessing a row through the clustered index is fast because the row data is on the same page where the index search leads. If a table is large, the clustered index architecture often saves a disk I/O operation when compared to storage organizations that store row data using a different page from the index record. For example, MyISAM uses one file for data rows and another for index records.

更多信息在这里:

- http://dev.mysql.com/doc/refman/5.0/en/innodb-index-types.html

简化的innodb结果表

drop table if exists results_innodb;

create table results_innodb

(

rc tinyint unsigned not null,

df int unsigned not null default 0,

id int unsigned not null, -- cant auto_inc this !!

val double(10,4) not null default 0,

ts timestamp not null default now(),

primary key (rc, df, id) -- note clustered (innodb only !) composite PK

)

engine=innodb;

innodb 的一个问题是它不支持构成复合键一部分的 auto_increment 字段,因此您必须使用序列生成器、触发器或其他一些方法自己提供递增的键值 - 也许在填充结果表本身??

One problem with innodb is that is doesnt support auto_increment fields that form part of a composite key so you'd have to provide the incrementing key value yourself using a sequence generator, trigger or some other method - perhaps in the application populating the result table itself ??

同样,我生成了 100M 行数据,其中关键字段的基数与您的示例中的基数大致相同.如果这些数字与 myisam 示例不匹配,请不要担心,因为 innodb 会估计基数,因此它们不会完全相同.(但它们是 - 使用相同的数据集)

Again, I generated 100M rows of data with the key fields having approximately the same cardinality as in your example. Don't worry if these figures don't match the myisam example as innodb estimates the cardinalities so they wont be exactly the same. (but they are - same dataset used)

show indexes from results_innodb;

Table Non_unique Key_name Seq_in_index Column_name Collation Cardinality Index_type

===== ========== ======== ============ =========== ========= =========== ==========

results_innodb 0 PRIMARY 1 rc A 18 BTREE

results_innodb 0 PRIMARY 2 df A 18 BTREE

results_innodb 0 PRIMARY 3 id A 100000294 BTREE

存储过程

存储过程与上面的 myisam 示例完全相同,只是从 innodb 表中选择数据.

The stored procedure is exactly the same as the myisam example above but selects data from the innodb table instead.

declare v_result_cur cursor for select id from results_innodb where rc = p_rc and df > p_df;

结果如下:

call process_results_innodb(0,60);

runtime 1 = 01:53.407 Query OK (1 mins 53 secs)

runtime 2 = 01:52.088 Query OK (1 mins 52 secs)

call process_results_innodb(1,60);

runtime 1 = 02:01.201 Query OK (2 mins 01 secs)

runtime 2 = 01:49.737 Query OK (1 mins 50 secs)

counter

========

23000002 (23 million rows processed in each case)

比 myisam 引擎实现快约 2-3 分钟!(innodb FTW)

approx 2-3 mins faster than the myisam engine implementation ! (innodb FTW)

在使用游标的服务器端存储过程中处理结果可能不是最佳解决方案,尤其是因为 MySQL 不支持在 3GL 语言(如 C# 等,甚至在其他数据库,如 Oracle PL/SQL.

Processing the results in a server side stored procedure that uses a cursor might not be an optimal solution especially as MySQL doesnt have support for things such arrays and complex data structures that are readily available in 3GL languages such as C# etc or even in other databases such as Oracle PL/SQL.

所以这里的想法是将成批的数据返回到应用层(C# 无论如何),然后应用层可以将结果添加到基于集合的数据结构中,然后在内部处理数据.

So the idea here is to return batches of data to an application layer (C# whatever) which can then add the results to a collection based data structure and then process the data internally.

存储过程

存储过程需要 3 个参数 rc、df_low 和 df_high,它允许您选择数据范围如下:

The stored procedure takes 3 paramaters rc, df_low and df_high which allows you to select a range of data as follows:

call list_results_innodb(0,1,1); -- df 1

call list_results_innodb(0,1,10); -- df between 1 and 10

call list_results_innodb(0,60,120); -- df between 60 and 120 etc...

显然 df 范围越高,您提取的数据就越多.

obviously the higher the df range the more data you'll be extracting.

drop procedure if exists list_results_innodb;

delimiter #

create procedure list_results_innodb

(

in p_rc tinyint unsigned,

in p_df_low int unsigned,

in p_df_high int unsigned

)

begin

select rc, df, id from results_innodb where rc = p_rc and df between p_df_low and p_df_high;

end #

delimiter ;

我也敲了一个 myisam 版本,除了使用的表之外,它是相同的.

I also knocked up a myisam version also which is identical except for the table that is used.

call list_results_1mregr_c_ew_f(0,1,1);

call list_results_1mregr_c_ew_f(0,1,10);

call list_results_1mregr_c_ew_f(0,60,120);

基于上面的光标示例,我希望 innodb 版本的性能优于 myisam 版本.

Based on the cursor example above I would expect the innodb version to out-perform the myisam one.

我开发了一个又快又脏的多线程 C# 应用程序,它将调用存储过程并将结果添加到集合中以进行查询后处理.您不必使用线程,同样的批量查询方法可以按顺序完成,而不会损失太多性能.

I devloped a quick and dirty multi-threaded C# application that will call the stored procedure and add the results to a collection for post query processing. You dont have to use threads, the same batched query approach could be done sequentially without much loss of performance.

每个线程 (QueryThread) 选择一个范围的 df 数据,循环结果集并将每个结果(行)添加到结果集合中.

Each thread (QueryThread) selects a range of df data, loops the resultset and adds each result (row) to the results collection.

class Program

{

static void Main(string[] args)

{

const int MAX_THREADS = 12;

const int MAX_RC = 120;

List<AutoResetEvent> signals = new List<AutoResetEvent>();

ResultDictionary results = new ResultDictionary(); // thread safe collection

DateTime startTime = DateTime.Now;

int step = (int)Math.Ceiling((double)MAX_RC / MAX_THREADS) -1;

int start = 1, end = 0;

for (int i = 0; i < MAX_THREADS; i++){

end = (i == MAX_THREADS - 1) ? MAX_RC : end + step;

signals.Add(new AutoResetEvent(false));

QueryThread st = new QueryThread(i,signals[i],results,0,start,end);

start = end + 1;

}

WaitHandle.WaitAll(signals.ToArray());

TimeSpan runTime = DateTime.Now - startTime;

Console.WriteLine("{0} results fetched and looped in {1} secs\nPress any key", results.Count, runTime.ToString());

Console.ReadKey();

}

}

运行时观察如下:

Thread 04 done - 31580517

Thread 06 done - 44313475

Thread 07 done - 45776055

Thread 03 done - 46292196

Thread 00 done - 47008566

Thread 10 done - 47910554

Thread 02 done - 48194632

Thread 09 done - 48201782

Thread 05 done - 48253744

Thread 08 done - 48332639

Thread 01 done - 48496235

Thread 11 done - 50000000

50000000 results fetched and looped in 00:00:55.5731786 secs

Press any key

因此在 60 秒内提取了 5000 万行并将其添加到集合中.

So 50 million rows fetched and added to a collection in under 60 seconds.

我使用 myisam 存储过程尝试了同样的事情,该过程需要 2 分钟才能完成.

I tried the same thing using the myisam stored procedure which took 2 minutes to complete.

50000000 results fetched and looped in 00:01:59.2144880 secs

转向innodb

在我的简化系统中,myisam 表的性能并不差,因此可能不值得迁移到 innodb.如果您决定将结果数据复制到 innodb 表中,请按以下方式操作:

Moving to innodb

In my simplified system the myisam table doesnt perform too badly so it might not be worth migrating to innodb. If you do decided to copy your result data to an innodb table then do it as follows:

start transaction;

insert into results_innodb

select <fields...> from results_1mregr_c_ew_f order by <innodb primary key>;

commit;

在插入和包装整个事务之前通过 innodb PK 对结果进行排序将加快处理速度.

Ordering the result by the innodb PK before inserting and wrapping the whole thing up in a transaction will speed things up.

我希望其中的一些内容会有所帮助.

I hope some of this proves helpful.

祝你好运

这篇关于用于提供大量数据的查询的最佳 MySQL 设置?的文章就介绍到这了,希望我们推荐的答案对大家有所帮助,也希望大家多多支持编程学习网!

本文标题为:用于提供大量数据的查询的最佳 MySQL 设置?

基础教程推荐

- 如何在 CakePHP 3 中实现 INSERT ON DUPLICATE KEY UPDATE aka upsert? 2021-01-01

- while 在触发器内循环以遍历 sql 中表的所有列 2022-01-01

- MySQL根据从其他列分组的值,对两列之间的值进行求和 2022-01-01

- CHECKSUM 和 CHECKSUM_AGG:算法是什么? 2021-01-01

- MySQL 5.7参照时间戳生成日期列 2022-01-01

- 使用 VBS 和注册表来确定安装了哪个版本和 32 位 2021-01-01

- 带更新的 sqlite CTE 2022-01-01

- 带有WHERE子句的LAG()函数 2022-01-01

- 从字符串 TSQL 中获取数字 2021-01-01

- ORA-01830:日期格式图片在转换整个输入字符串之前结束/选择日期查询的总和 2021-01-01