Querying Large Dataset on Join (15+ million rows)

Question

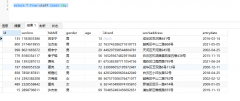


I am trying to join two tables, products and products_markets. While products has under a million records, products_markets has closer to 20 million. The data has been changed, so there might be a typo or two in the CREATE TABLE statements below:

```sql
CREATE TABLE `products_markets` (
  `id` int(10) unsigned NOT NULL AUTO_INCREMENT,
  `product_id` int(10) unsigned NOT NULL,
  `country_code_id` int(10) unsigned NOT NULL,
  `created_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
  `updated_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`),
  UNIQUE KEY `unique_index` (`product_id`,`country_code_id`)
) ENGINE=InnoDB AUTO_INCREMENT=21052102 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;

CREATE TABLE `products` (
  `id` int(10) unsigned NOT NULL AUTO_INCREMENT,
  `manufacturer_id` int(10) unsigned NOT NULL,
  `department_id` int(10) unsigned NOT NULL,
  `code` varchar(100) COLLATE utf8mb4_unicode_ci NOT NULL,
  `popularity` int(11) DEFAULT NULL,
  `name` varchar(255) COLLATE utf8mb4_unicode_ci NOT NULL,
  `value` bigint(20) unsigned NOT NULL,
  `created_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
  `updated_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`),
  UNIQUE KEY `products_code_unique` (`code`),
  KEY `products_department_id_foreign` (`department_id`),
  KEY `products_manufacturer_id_foreign` (`manufacturer_id`),
  CONSTRAINT `products_department_id_foreign` FOREIGN KEY (`department_id`) REFERENCES `departments` (`id`),
  CONSTRAINT `products_manufacturer_id_foreign` FOREIGN KEY (`manufacturer_id`) REFERENCES `manufacturers` (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=731563 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;
```


I am trying to return 50 records of the most popular products available in a specific country, and I am seeing query times of around 50 seconds, which seems longer than expected.


I've tried a few different queries with no success:

```sql
select `products_markets`.`product_id`
from products_markets
left join
    ( SELECT products.id, products.popularity
      from products
    ) p ON p.id = products_markets.product_id
where products_markets.country_code_id = 121
order by `popularity` desc, `p`.`id` asc
limit 50
```

and

```sql
select `products`.*
from `products`
where products.id in (
    SELECT product_id
    from products_markets
    where products_markets.country_code_id = 121
)
group by `products`.`name`, `products`.`manufacturer_id`
order by `popularity` desc, `products`.`id` asc
limit 50
```

The EXPLAIN output for this query is:

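| id | select_type | table | type | possible_keys | key | key_len | ref | rows | Extra |
|----|-------------|-------|------|---------------|-----|---------|-----|------|-------|
| 1 | PRIMARY | products | ALL | PRIMARY | NULL | NULL | NULL | 623848 | Using temporary; Using filesort |
| 1 | PRIMARY | products_markets | ref | unique_index | unique_index | 4 | main.products.id | 14 | Using where; Using index; FirstMatch(products) |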


One option I am considering is splitting products_markets into a separate table for each country, so that each query has less data to go through. I've tried adding more memory to the server without much success. Can anyone identify anything glaringly wrong with the database design or the queries?


What other options are available to make this query a fraction of its current ~50 seconds?

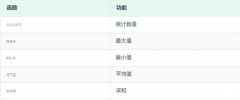

Recommended answer

Get rid of `id` in products_markets and add

```sql
PRIMARY KEY(country_code_id, product_id)
```


Then get rid of the UNIQUE key unless it is needed for some other query.
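A minimal MySQL sketch of that change, assuming that no other table or application code references `products_markets.id` and that no other query needs `unique_index`. The existing UNIQUE key already guarantees there are no duplicate (product_id, country_code_id) pairs, so the new primary key can be built without conflicts. Because InnoDB stores rows in primary-key order, this places all rows for one country next to each other on disk. On a table of roughly 20 million rows the statement rebuilds the whole table, so trying it on a copy first is prudent.

```sql
-- Sketch only: assumes nothing references products_markets.id
-- and that unique_index is not needed by other queries.
ALTER TABLE products_markets
    DROP PRIMARY KEY,                              -- remove the old surrogate key
    DROP COLUMN id,                                -- drop the AUTO_INCREMENT column itself
    ADD PRIMARY KEY (country_code_id, product_id), -- cluster rows by country
    DROP INDEX unique_index;                       -- now redundant secondary index
```

If some other query does look up markets by `product_id` alone, keeping a plain `KEY (product_id)` instead of dropping the index entirely would be the more cautious variant.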


This will shrink the disk footprint of that large table significantly, thereby potentially speeding up all queries touching it.


And it will help with Hamaza's suggested reformulation.
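Hamaza's reformulation is not shown on this page, so the query below is only an illustrative assumption: the asker's first query with the derived table and LEFT JOIN replaced by a plain inner join, which assumes every `product_id` in products_markets exists in products. With `PRIMARY KEY(country_code_id, product_id)`, the `country_code_id = 121` filter can read one contiguous range of the clustered index, instead of scanning every product and probing products_markets for each one, as the EXPLAIN output above shows for the `IN` version.

```sql
-- Illustrative rewrite (not Hamaza's query): top 50 products by popularity
-- in one country. Assumes every products_markets.product_id exists in products.
SELECT p.id, p.popularity
FROM products_markets AS pm
JOIN products AS p ON p.id = pm.product_id   -- primary-key lookup per matching row
WHERE pm.country_code_id = 121               -- range on the new clustered primary key
ORDER BY p.popularity DESC, p.id ASC
LIMIT 50;
```

Prefixing the query with EXPLAIN should then show `PRIMARY` as the key used for products_markets. The sort over the matching rows still happens, so how much this helps depends on how many products are available in that country.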
